What makes a system truly alive? Not perfection, but the ability to stumble, sense, and adapt—to learn from error, and even to evolve the way it learns.

Introduction: The Power of Mistake

A child learns to walk not by following perfect instructions, but by falling and correcting.

Ecosystems recover from disaster by reshuffling their patterns.

Markets, brains, and even AI systems adapt—not by never failing, but by using failure as fuel for new strategies.

In static models, errors are nuisances—things to minimize or ignore.

In DAM X, error is information. It is the driving force for growth, recalibration, and transformation.

Learning as a Living Process

Learning in DAM X is not a one-time fit to past data.

Instead, it is a continuous process, always happening, always changing.

- The model measures its own performance at each time step.

- It tunes its adaptation rates, connection strengths, and even learning rules in response to feedback.

- Error is not just a gap; it is a signal.

Error-Driven Update: The Core Loop

At every step, DAM X compares what it expected to what actually happened.

Mathematical Representation

Let $y_{\text{true}}(t)$ be the real observation at time $t$, and $y_{\text{pred}}(t)$ be the model's prediction.

Error:

$$e(t) = y_{\text{true}}(t) - y_{\text{pred}}(t)$$

Learning Rate Update (example):

$$\alpha_{\text{new}} = \alpha_{\text{old}} + \eta \cdot |e(t)|$$

where $\eta$ controls how much error affects adaptation.

Connection Strength Adjustment:

$$\theta_{ij}(t+1) = \theta_{ij}(t) + \gamma \cdot e(t) \cdot \text{influence}_{ij}(t)$$

where $\gamma$ is a scaling factor.

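When errors are large, the system learns faster; when errors are small, the learning rate barely moves. As written, the example rule can only increase the learning rate, so true stabilization also requires a decay term or an upper bound on it.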
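The three update rules can be combined into a single step function. The following is a minimal sketch, not DAM X's actual implementation: the function name `error_driven_step` is hypothetical, and it assumes the prediction is a weighted sum of the influences, which the text does not specify. It also treats one row of the connection strengths as a plain list.

```python
def error_driven_step(theta, alpha, influence, y_true, eta=0.01, gamma=0.1):
    """One pass of the loop: predict, measure the error, update alpha and theta.

    Assumption (not from the text): the prediction is the weighted sum
    of the influences, sum(theta_j * influence_j).
    """
    y_pred = sum(w * x for w, x in zip(theta, influence))
    e = y_true - y_pred                      # e(t) = y_true(t) - y_pred(t)
    alpha_new = alpha + eta * abs(e)         # learning-rate update
    theta_new = [w + gamma * e * x           # connection-strength adjustment
                 for w, x in zip(theta, influence)]
    return theta_new, alpha_new, e


# Illustrative usage: repeated steps toward a fixed target of 1.0.
theta, alpha = [0.0, 0.0], 0.5
influence = [1.0, 2.0]
for _ in range(3):
    theta, alpha, e = error_driven_step(theta, alpha, influence, y_true=1.0)
```

With these illustrative values the error roughly halves on each step, because every update removes a fixed fraction of it. Note that `alpha` is tracked but not used in the weight update, which mirrors the equations as given.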

Online and Continual Learning

Most traditional models are trained once, then deployed—doomed to obsolescence as the world shifts.

DAM X is built for online learning:

- Incremental updates: The model refines its knowledge as each new data point arrives.

- Concept drift detection: If the pattern of errors changes, the system recognizes that the world has shifted and recalibrates.

- Meta-learning: Not only do parameters adapt, but the rules of adaptation themselves evolve over time.
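To make the idea of incremental updates concrete, here is a small sketch of a learner that revises its estimate after every observation instead of being fit once. The class `OnlineEstimator` and its fixed step size are hypothetical and chosen for illustration; they are not part of DAM X.

```python
class OnlineEstimator:
    """Hypothetical minimal online learner: tracks a single running estimate."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha      # step size: how strongly each error moves the estimate
        self.estimate = 0.0

    def predict(self):
        return self.estimate

    def update(self, observation):
        error = observation - self.estimate
        self.estimate += self.alpha * error   # move part of the way toward the new point
        return error


# Process a stream one point at a time; no retraining on past data.
stream = [2.0, 4.0, 6.0]
model = OnlineEstimator(alpha=0.5)
errors = []
for y in stream:
    errors.append(model.update(y))
```

Because each update depends only on the current estimate and the newest point, the learner needs no stored history and can follow a slowly shifting target.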

Example: ADWIN Algorithm for Concept Drift

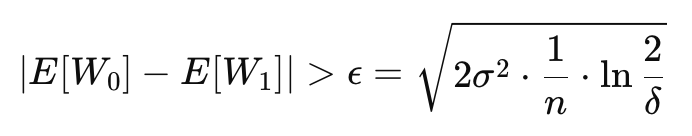

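ADWIN (ADaptive WINdowing) keeps a sliding window of recent errors whose length adjusts automatically. It signals drift when the average error in the older part of the window differs from the average in the newer part by more than a statistical threshold.

When drift is detected, the model adapts more aggressively.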
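The windowed comparison behind this kind of drift test can be sketched in a few lines. This is a simplified, ADWIN-inspired detector and not the full algorithm: it assumes values scaled to [0, 1], caps the window at a fixed maximum, and scans every split directly, whereas ADWIN proper compresses the window into buckets. The class name and default parameters are hypothetical.

```python
import math
from collections import deque


class SimpleWindowDriftDetector:
    """Simplified ADWIN-style check: compare the means of two sub-windows."""

    def __init__(self, delta=0.002, max_window=200, min_sub=5):
        self.delta = delta                      # confidence parameter
        self.window = deque(maxlen=max_window)  # most recent values
        self.min_sub = min_sub                  # smallest sub-window considered

    def add(self, value):
        """Add one value in [0, 1]; return True if drift is detected."""
        self.window.append(value)
        values = list(self.window)
        n = len(values)
        total = sum(values)
        head = 0.0
        for i in range(1, n):
            head += values[i - 1]               # sum of the older sub-window
            n0, n1 = i, n - i
            if n0 < self.min_sub or n1 < self.min_sub:
                continue
            mean0 = head / n0
            mean1 = (total - head) / n1
            m = 1.0 / (1.0 / n0 + 1.0 / n1)
            # Hoeffding-style cut threshold
            eps_cut = math.sqrt(math.log(4.0 * n / self.delta) / (2.0 * m))
            if abs(mean0 - mean1) > eps_cut:
                for _ in range(n0):             # forget the older sub-window
                    self.window.popleft()
                return True
        return False


# Illustrative usage: a stable error level followed by an abrupt shift.
detector = SimpleWindowDriftDetector()
before = [detector.add(0.1) for _ in range(50)]
after = [detector.add(0.9) for _ in range(50)]
```

In this run the detector stays quiet during the stable phase and fires once enough post-shift values have accumulated. For production use, a maintained implementation of ADWIN is a safer choice than this sketch.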

From Local to Global Learning

- Local learning: Each entity can adapt its own parameters based on its unique error signal.

- Global adaptation: If a systemic shock is detected (many entities see large errors), the entire network, learning rules, or even goals can update.
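One simple way to separate local from global adaptation is to count how many entities currently see a large error. The sketch below assumes this counting rule; the function name `classify_shock` and both thresholds are hypothetical, and the text does not say how DAM X actually detects a systemic shock.

```python
def classify_shock(errors, local_threshold=1.0, global_fraction=0.5):
    """Split a set of per-entity errors into local and global responses.

    errors: dict mapping entity name -> current error.
    Returns (entities with large errors, whether a global update is warranted).
    """
    if not errors:
        return [], False
    large = [name for name, e in errors.items() if abs(e) > local_threshold]
    share = len(large) / len(errors)
    return large, share >= global_fraction


# Illustrative usage: one outlier versus a widespread shock.
isolated = classify_shock({"a": 0.2, "b": 1.5, "c": 0.3, "d": 0.1})
systemic = classify_shock({"a": 0.2, "b": 1.5, "c": -2.0, "d": 0.1})
```

In the first call only entity `b` would adapt locally; in the second, half of the entities exceed the threshold, so the sketch also flags a global update.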

Practical Example: Real-Time Health Monitoring

Imagine a wearable device using DAM X to monitor a patient’s heart rate:

- Small deviations trigger mild adjustments—maybe tuning sensitivity.

- Sudden, sustained errors (e.g., a dramatic shift in heart rhythm) signal the device to escalate, alert caregivers, or switch to a new detection mode.

This continual feedback keeps the system both stable and agile—resistant to noise, but responsive to real change.
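The escalation logic described above can be sketched as a small state machine that distinguishes brief blips from sustained deviations. The function `monitor_step`, the action labels, and all thresholds are hypothetical values chosen for illustration, not clinical settings.

```python
def monitor_step(error, streak, small=5.0, large=20.0, sustain=3):
    """Decide how to react to one heart-rate reading.

    error:  observed minus expected heart rate, in beats per minute.
    streak: number of consecutive large errors seen so far.
    Returns (action, new_streak).
    """
    magnitude = abs(error)
    if magnitude >= large:
        streak += 1
        # A single spike is only watched; a sustained run escalates.
        action = "escalate" if streak >= sustain else "watch"
    else:
        streak = 0
        action = "tune" if magnitude >= small else "ok"
    return action, streak


# Illustrative usage over a short sequence of errors.
actions = []
streak = 0
for err in [2.0, 8.0, 25.0, 30.0, 28.0, 3.0]:
    action, streak = monitor_step(err, streak)
    actions.append(action)
```

Requiring several consecutive large errors before escalating is what makes the sketch resistant to noise while still responding to a real change.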

Sample Code: Error-Driven Learning Rate Adjustment

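```python
def update_learning_rate(alpha, error, eta=0.01):
    """Raise the learning rate in proportion to the absolute error."""
    return alpha + eta * abs(error)

# Example usage in an online loop.
# `model` and `data` are assumed to exist: `model` exposes predict(), update(),
# and an `alpha` attribute; `data` is a sequence of observations.
for observation in data:
    prediction = model.predict(observation)
    error = observation - prediction  # e(t) = y_true(t) - y_pred(t)
    model.alpha = update_learning_rate(model.alpha, error)
    model.update(observation, model.alpha)
```

Why Adaptive Learning Matters for Living Models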

Perfection is brittle; adaptability is robust.

DAM X embraces error as an opportunity:

- To learn faster when the world is new.

- To stabilize when things are calm.

- To transform even its own learning process as surprises emerge.

To model life, we must let models learn—again and again, forever changing in the light of error.